For example, if we have a list of 10,000 words of English and hash brown egg casserole want to check if a given word is in the list, it would be inefficient to successively compare the word with all 10,000 items until we find a match. Even if the list of words are lexicographically sorted, like in a dictionary, you will still need some time to find the word you are looking for.

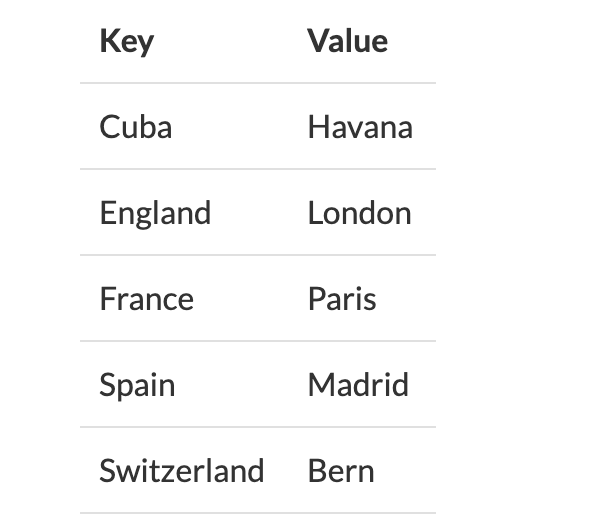

Hashing is a technique to make things more efficient by effectively narrowing down the search at the outset. Hashing means using some function or algorithm to map object data to some representative integer value. Generally, these hash codes are used to generate an index, at which the value is stored. The key, which is used to identify the data, is given as an input to the hashing function. The hash code, which is an integer, is then mapped to the fixed size we have. Hash tables have to support 3 functions. So let’s say we want to store the data in Table in the map.

And let us suppose that our hash function is to simply take the length of the string. For simplicity, we will have two arrays: one for our keys and one for the values. So we store Cuba in the 4th position in the keys array, and Havana in the 4th index of the values array etc. Now, in this specific example things work quite well. Our array needs to be big enough to accommodate the longest string, but in this case that’s only 11 slots. We do waste a bit of space because, for example, there are no 1-letter keys in our data, nor keys between 8 and 10 letters.

But in this case, the wasted space isn’t so bad either. But, what do we do if our dataset has a string which has more than 11 characters? Since the index 5 is already occupied, we have to make a call on what to do with it. If our dataset had a string with thousand characters, and you make an array of thousand indices to store the data, it would result in a wastage of space. If our keys were random words from English, where there are so many words with same length, using length as a hashing function would be fairly useless. In our example, when we add India to the dataset, it is appended to the linked list stored at the index 5, then our table would look like this. To find an item we first go to the bucket and then compare keys.

This is a popular method, and if a list of links is used the hash never fills up. The problem with separate chaining is that the data structure can grow with out bounds. If a collision occurs then we look for availability in the next spot generated by an algorithm. Open Addressing is generally used where storage space is a restricted, i.